Importance of Big Data Testing for Data Quality Management

What is big data?

To understand big data testing, it is important to first understand big data. Big data is data that arrives in high volume and at a high rate, and it is considered one of the biggest assets a firm has today. It is the core element that makes it possible to:

- Innovate new ideas,

- Enhance business reporting,

- Enhance decision making,

- Automate complex machine learning and data vision products.

A Deloitte study found that 62% of companies use big data to generate business insights. Users constantly hand over their data to public platforms such as Google services, Facebook, and Apple’s cloud services. Unsurprisingly, Facebook generates about 4 petabytes of data every day, and data like this is exactly what companies are looking for: they need it to make insightful analyses and better decisions.

The easiest way to understand big data is to take the term literally: a large amount of data that can be used to generate useful insights. Facebook Inc. has around 2 billion users who actively share their data. Even a user who is not uploading anything still sends packets to the server and shares their location. All this data is collected and structured for use, which is one reason Facebook is such a massive hit.

The collected data can take 3 forms:

- Structured,

- Unstructured, and/or

- Semi-structured.

As the names suggest, they differ in how readily the data can be turned into information. Let’s look deeper.

The first one, structured data, is organized and easy to retrieve: simple database queries are enough to fetch it. This data lives in enterprise-level databases, data warehouses, and CRMs.

Unstructured data is not tabular; it can be files, informational packets, or streams. Common examples are GPS location streams, voice messages, and any analog data.

Lastly, semi-structured data cannot be fetched with simple queries but becomes usable after some processing. It often carries metadata or tags, as in CSV, XML, or JSON (JavaScript Object Notation) files.
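To make the distinction concrete, here is a minimal sketch in Python using only the standard library. The `customers` table, the field names, and the sample records are hypothetical and chosen purely for illustration. Structured data answers a plain SQL query directly, while a semi-structured JSON record needs a parsing step first.

```python
import json
import sqlite3

# Structured data: rows in a table, retrievable with a simple query.
# The "customers" table and its contents are made-up sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "UK"), (2, "Linus", "FI"), (3, "Grace", "US")],
)
structured_result = conn.execute(
    "SELECT name FROM customers WHERE country = ?", ("FI",)
).fetchall()
conn.close()

# Semi-structured data: tagged and nested, so it has to be parsed
# before the same kind of question can be answered.
raw_json = '{"user": {"name": "Linus", "tags": ["kernel", "git"]}, "country": "FI"}'
record = json.loads(raw_json)
semi_structured_result = record["user"]["name"]

print(structured_result)
print(semi_structured_result)
```

Unstructured data, such as a voice message, has no comparable shortcut: it needs dedicated processing before any field can be queried at all.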

What is Big Data Testing?

Big data applications have to be tested on a larger scale than most other software. This process is called big data testing. It comes in handy when data sets are too large for conventional, straightforward computation. Big data testing involves dedicated tools and frameworks, with clear steps to create, store, retrieve, and analyze data of remarkable volume, velocity, and variety.

These steps have to be strategically planned to understand the correct characteristics of the data.

The 5 main characteristics, commonly known as the five Vs of big data, are volume, velocity, variety, veracity, and value.

What are Data Processing Strategies?

Because testing is a strategic task, the strategy has to be planned. The strategy determines the processing techniques, so it is closely linked with data processing. Data processing can be of 3 types:

- batch,

- real-time and

- iterative.
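The difference between the three types can be illustrated with a small Python sketch that computes the same quantity, an average, in each style. The function names and the sample readings are assumptions made for this example, not part of any particular big data framework.

```python
from typing import Iterable, Iterator, List


def batch_mean(values: List[float]) -> float:
    """Batch: the whole data set is available and processed in one go."""
    return sum(values) / len(values)


def streaming_mean(stream: Iterable[float]) -> Iterator[float]:
    """Real-time: the result is updated as each record arrives."""
    count, total = 0, 0.0
    for value in stream:
        count += 1
        total += value
        yield total / count


def iterative_mean(values: List[float], learning_rate: float = 0.1,
                   tolerance: float = 1e-9, max_passes: int = 10_000) -> float:
    """Iterative: repeated passes over the data refine an estimate
    until it stops changing (gradient descent on the squared error)."""
    estimate = 0.0
    for _ in range(max_passes):
        gradient = sum(estimate - v for v in values) / len(values)
        new_estimate = estimate - learning_rate * gradient
        if abs(new_estimate - estimate) < tolerance:
            return new_estimate
        estimate = new_estimate
    return estimate


readings = [10.0, 20.0, 30.0, 40.0]  # hypothetical sample data
batch_result = batch_mean(readings)
streaming_results = list(streaming_mean(readings))
iterative_result = iterative_mean(readings)
```

All three arrive at the same answer for this data. What changes is when the data is available and how many passes over it are needed, and that in turn shapes what a test has to check.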

Big data processing is done by data engineers, while big data testing is performed by QA engineers. For data quality testing, data quality management (DQM) tools come in handy.

Many aspects of big data need to be tested, and several of these areas make up entire testing categories on their own: for example, functional testing, performance testing, database testing, and infrastructure testing. E-commerce is one area where big data plays a big role. E-commerce sites such as Alibaba, Amazon, Wish, and Flipkart handle huge traffic and take in an enormous amount of data and sensor inputs every minute.

What Are Common Big Data Testing Strategies?

We discuss four main testing strategies: data ingestion testing, data processing testing, data storage testing, and data migration testing.

1. Data Ingestion Testing is the testing of data arriving from multiple sources such as files, logs, sensors, and uploads. Its main purpose is to verify that extraction and loading are complete. Data has to be ingested according to the schema without any corruption or loss. This is checked by taking portions of the data and comparing the results.

2. Data Processing Testing is the testing of aggregated data. It makes sure that the business logic is intact and that inputs and outputs correlate. More is explained in the “What are Data Processing Strategies?” section above.

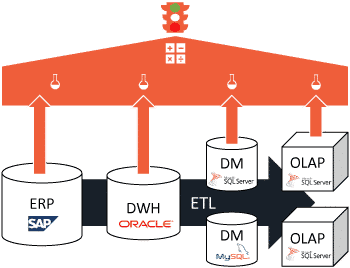

3. Data Storage Testing is the verification of the output and its correct loading in the data warehouse.

4. Data Migration Testing is the testing done after the application moves from one server to another. This may happen due to organizational demands, technological changes, or business management instructions. The new and old systems are compared and contrasted. There are three major types of migration testing: pre-migration, during migration, and post-migration. All configurations are tested and functionalities are compared as well.
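The completeness, schema, and sampling checks behind data ingestion testing (item 1) can be sketched as follows. The same comparison can serve migration testing (item 4), with the old system as the source and the new system as the target. Everything here is an assumption made for illustration: the schema in `EXPECTED_SCHEMA`, the helper names, and the in-memory lists standing in for real source and target stores.

```python
import hashlib
import json
import random
from typing import Dict, List

Record = Dict[str, object]

# Hypothetical schema: field name -> expected Python type.
EXPECTED_SCHEMA = {"id": int, "event": str, "amount": float}


def conforms_to_schema(record: Record) -> bool:
    """True if the record has exactly the expected fields and types."""
    return (
        record.keys() == EXPECTED_SCHEMA.keys()
        and all(isinstance(record[k], t) for k, t in EXPECTED_SCHEMA.items())
    )


def fingerprint(record: Record) -> str:
    """Stable hash of a record, used to detect corruption."""
    canonical = json.dumps(record, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def check_ingestion(source: List[Record], target: List[Record],
                    sample_size: int = 2, seed: int = 0) -> List[str]:
    """Return the problems found; an empty list means all checks passed."""
    problems = []
    if len(source) != len(target):
        problems.append(f"row count mismatch: {len(source)} != {len(target)}")
    bad = [r for r in target if not conforms_to_schema(r)]
    if bad:
        problems.append(f"{len(bad)} record(s) violate the schema")
    # Take a random portion of the source and compare it with the target.
    target_by_id = {r.get("id"): r for r in target}
    rng = random.Random(seed)
    for record in rng.sample(source, min(sample_size, len(source))):
        loaded = target_by_id.get(record["id"])
        if loaded is None or fingerprint(loaded) != fingerprint(record):
            problems.append(f"record {record['id']} missing or corrupted")
    return problems


# Made-up sample data: a clean load and a load with one corrupted value.
source = [
    {"id": 1, "event": "click", "amount": 1.5},
    {"id": 2, "event": "buy", "amount": 20.0},
    {"id": 3, "event": "view", "amount": 0.0},
]
clean_target = [dict(r) for r in source]
corrupted_target = [dict(r) for r in source]
corrupted_target[1]["amount"] = 99.0

clean_problems = check_ingestion(source, clean_target)
corrupted_problems = check_ingestion(source, corrupted_target, sample_size=3)
```

Sampling keeps the check cheap on large volumes, at the cost of possibly missing a corrupted record that falls outside the sample. Raising `sample_size` trades speed for coverage.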

What are the major database testing activities?

Three major activities are needed to perform successful database testing. They have one thing in common: all of them are validation processes.

1. Validation # 1 - Data Staging: Validation of input data.

2. Validation # 2 - Process Validation: Validation of processed data.

3. Validation # 3 - Output Validation: Validation of data stored in the data warehouse.
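The three validations can be pictured as checkpoints around a processing job. The sketch below is one possible arrangement, not a prescribed implementation. The row layout (`region`, `sales`), the aggregation job, and the dictionary standing in for the data warehouse are all hypothetical.

```python
from typing import Dict, List

Row = Dict[str, object]


def validate_staging(raw_rows: List[Row]) -> List[Row]:
    """Validation 1: keep only input rows that are complete and well typed."""
    return [
        r for r in raw_rows
        if isinstance(r.get("region"), str)
        and isinstance(r.get("sales"), (int, float))
    ]


def aggregate_sales(rows: List[Row]) -> Dict[str, float]:
    """The processing step under test: total sales per region."""
    totals: Dict[str, float] = {}
    for r in rows:
        totals[r["region"]] = totals.get(r["region"], 0.0) + float(r["sales"])
    return totals


def validate_process(rows: List[Row], totals: Dict[str, float]) -> bool:
    """Validation 2: the aggregated output must reconcile with its input."""
    input_sum = sum(float(r["sales"]) for r in rows)
    return abs(input_sum - sum(totals.values())) < 1e-9


def validate_output(totals: Dict[str, float], warehouse: Dict[str, float]) -> bool:
    """Validation 3: what was stored must equal what was produced."""
    return warehouse == totals


# Made-up input with two deliberately broken rows.
raw_rows = [
    {"region": "EU", "sales": 100},
    {"region": "US", "sales": 250.5},
    {"region": None, "sales": 10},
    {"region": "EU", "sales": "n/a"},
]
staged = validate_staging(raw_rows)
totals = aggregate_sales(staged)
warehouse = dict(totals)  # stand-in for loading into a data warehouse

process_ok = validate_process(staged, totals)
output_ok = validate_output(totals, warehouse)
```

Here the staging step silently drops invalid rows. A real test suite would more likely report them, so that rejected input is visible instead of quietly disappearing.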

What is the performance testing approach in big data applications?

To a layman, big data projects are the ones that process large amounts of data quickly. This demands processing power and a smooth flow of data. The architecture of any big data tool needs to be fully thought out for error-free operation. That is why performance testing is important.

Key aspects of performance testing are memory utilization, response rates, and application availability. Performance should be a priority when building and using big data-based applications. Jobs and standard procedures are built from routines, which are then tested to keep big data useful and manageable.
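Two of these aspects, response time and memory utilization, can be measured with Python’s standard library alone. The following is a rough sketch under stated assumptions: `measure_job` and `sum_of_squares` are hypothetical names, and the job is a toy stand-in for a real processing routine. Availability is not covered here, since it has to be observed on a running system over time.

```python
import time
import tracemalloc
from typing import Callable, Dict, List


def measure_job(job: Callable[[List[int]], int], data: List[int]) -> Dict[str, float]:
    """Run a job once and report elapsed time, throughput, and peak memory."""
    tracemalloc.start()
    started = time.perf_counter()
    job(data)
    elapsed = time.perf_counter() - started
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return {
        "elapsed_seconds": elapsed,
        "records_per_second": len(data) / elapsed if elapsed > 0 else float("inf"),
        "peak_memory_bytes": float(peak_bytes),
    }


def sum_of_squares(values: List[int]) -> int:
    """Toy job standing in for a real data processing routine."""
    return sum(v * v for v in values)


metrics = measure_job(sum_of_squares, list(range(100_000)))
print(metrics)
```

The numbers vary from machine to machine and run to run, so a performance test would normally compare them against an agreed budget rather than against fixed values.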

What is the functional testing approach in big data applications?

To a layman, functional testing means testing the front end against the requirements document. It focuses on comparing the application’s actual results with the expected ones and on validating the data. In this respect, functional testing of big data applications is the same as functional testing of ordinary software applications.
