DATA QUALITY MANAGEMENT

Achieving Business Agility with BiG EVAL's Integration Capabilities

This article explores how BiG EVAL's integration capabilities can help modern organizations achieve greater business agility by streamlining data validation and testing workflows, automating subsequent actions, and improving collaboration and communication between teams.

Introduction

Why is business agility important for modern organizations?

Business agility is the ability of an organization to respond rapidly and effectively to changes in the market, customer needs, and competitive pressures. It is characterized by the ability to adapt quickly to changing conditions, innovate, and pivot to new strategies when necessary.

In today's rapidly evolving business environment, business agility has become an essential characteristic of modern organizations. The pace of change has accelerated, and companies that cannot respond quickly to market shifts, changing customer needs, and disruptive innovations risk falling behind their competitors. Business agility helps organizations stay ahead of the curve by allowing them to identify and respond quickly to emerging trends and opportunities, and to pivot their strategies when conditions change. This, in turn, lets them develop innovative business solutions that drive customer satisfaction and growth while maintaining a competitive edge in the marketplace.

What is BiG EVAL?

BiG EVAL is a software tool designed to help businesses and organizations validate the accuracy and quality of their data. It provides automated testing and validation capabilities for data pipelines, data warehouses, and business intelligence systems. BiG EVAL is a valuable tool for any organization that wants to ensure its data is reliable, accurate, and of high quality, which is essential for achieving true business agility.

One of the key strengths of BiG EVAL is its integration capabilities. BiG EVAL can be easily integrated with other applications and processes, enabling organizations to streamline their data validation and testing workflows, and automate subsequent actions to address data quality issues. This enables organizations to achieve greater business agility by responding quickly to emerging trends and opportunities, and by making better-informed decisions based on reliable, accurate data.

The Challenges of Ensuring Data Quality

The importance of data quality in achieving business agility

Data quality is a critical factor in achieving business agility. In today's data-driven business environment, the accuracy, reliability, and completeness of data are essential for making informed decisions, identifying new opportunities, and responding quickly to emerging trends.

The importance of data quality can be seen in several ways. Firstly, data quality is essential for making informed decisions. Inaccurate or incomplete data can lead to incorrect conclusions and poor decision-making, which can be costly for businesses.

Secondly, data quality is essential for responding to market changes and identifying new opportunities. Modern organizations are constantly looking for new opportunities to grow and expand their operations. To do so effectively, they need to analyze large amounts of data quickly and accurately to spot emerging trends and new opportunities. This requires high-quality data that is accurate, reliable, and comprehensive, so that organizations can make the most of every opportunity that comes their way.

In summary, data quality is essential for achieving business agility. By ensuring the accuracy, reliability, and completeness of their data, organizations can position themselves for success in today's rapidly evolving business environment.

The challenges of ensuring data quality in modern organizations

As data volumes continue to grow and data environments become more complex, organizations face a number of challenges in ensuring that their data is accurate, reliable, and complete. Here are five of the most common challenges of ensuring data quality in modern organizations:

- Data complexity: As data environments become more complex, it becomes more difficult to ensure that data is accurate, reliable, and complete. Modern organizations often have multiple sources of data, distributed systems, and complex data pipelines, which can make it difficult to maintain data quality.

- Lack of data governance: Without a robust data governance framework in place, it can be difficult to ensure that data quality standards are consistently applied across the organization. In addition, a lack of clear ownership and accountability for data quality can lead to confusion and inconsistencies in data management practices.

- Data silos: When data is siloed across different departments and systems, it can be difficult to ensure that data quality standards are consistent across the organization. This can lead to inconsistencies in data management practices and make it difficult to identify and resolve data quality issues.

- Data security and privacy: Data security and privacy are critical concerns for modern organizations, and can make it difficult to ensure data quality. Security measures such as encryption and access controls can sometimes interfere with data quality validation and testing processes.

- Resource constraints: Ensuring data quality requires time, resources, and expertise. Many organizations lack the necessary resources or expertise to maintain data quality, which leads to incomplete or inaccurate data.

BiG EVAL's Integration Capabilities

An overview of BiG EVAL's integration capabilities

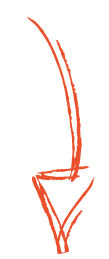

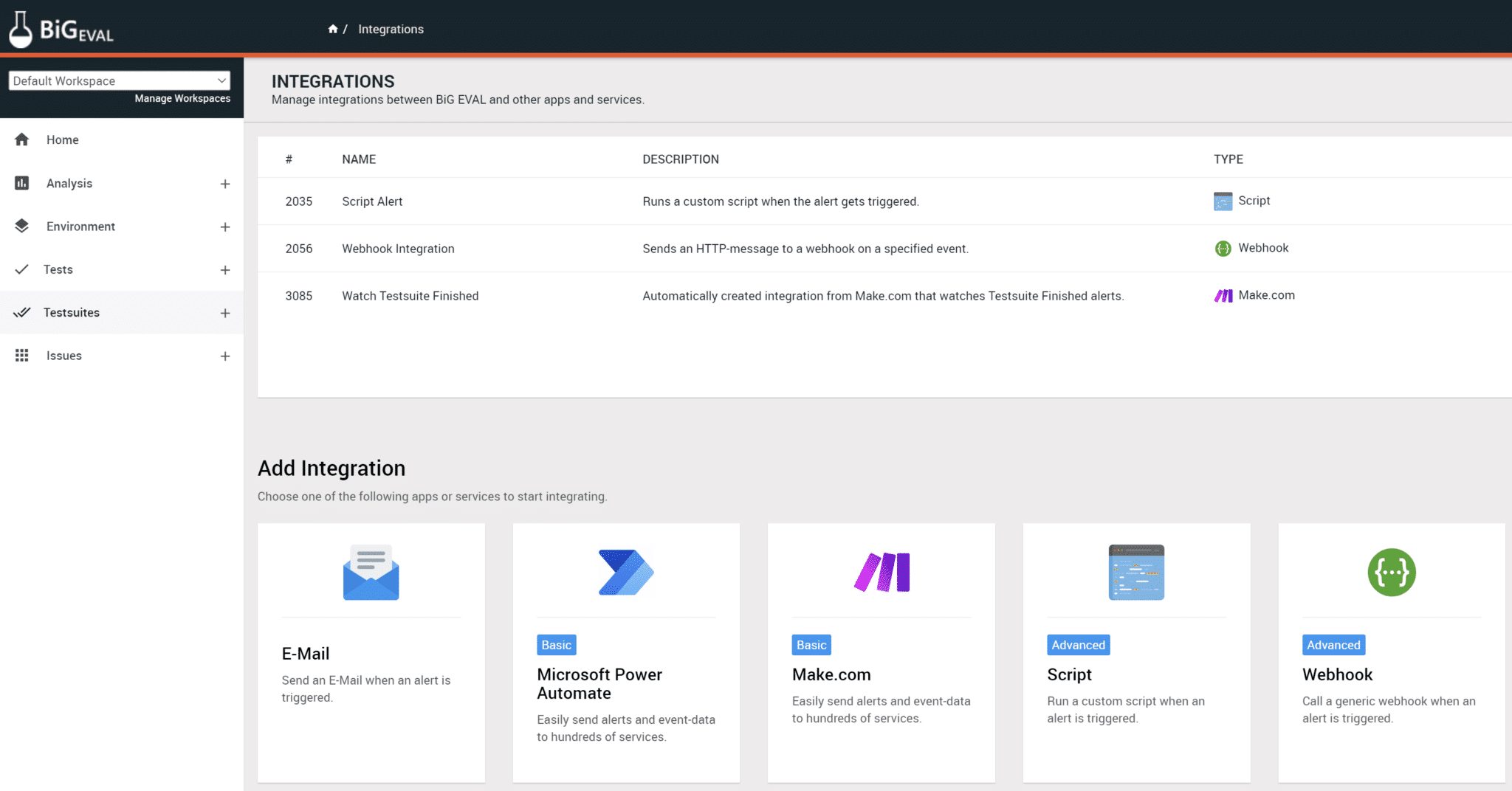

BiG EVAL's integration capabilities enable organizations to achieve greater business agility by streamlining data validation and testing workflows and automating subsequent actions to address data quality issues. There are two main scenarios for integrating BiG EVAL with external applications or processes.

The first scenario is integrating other applications and processes so that they trigger data validation and test runs within BiG EVAL when needed. Depending on the validation results, these applications and processes can then react differently.

For example, a data integration process could stop loading erroneous data into a data warehouse or business application. Similarly, a continuous integration process in software development could stop deploying faulty software components to end users before they harm business processes.
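As a minimal sketch of this first scenario, the Python code below shows how a data integration step might decide whether to continue loading after a validation run. The result format (a list of dicts with `test_name` and `status` keys, and the status value `"failed"`) and the `load_into_warehouse` step are hypothetical assumptions for illustration, not BiG EVAL's actual response schema.

```python
from typing import Iterable, Mapping

# Hypothetical status value; the real BiG EVAL result schema may differ.
FAILED = "failed"


class DataQualityGateError(RuntimeError):
    """Raised to stop a load when validation reports failures."""


def failed_tests(results: Iterable[Mapping[str, str]]) -> list[str]:
    """Return the names of all tests whose status is 'failed'."""
    return [r["test_name"] for r in results if r.get("status") == FAILED]


def enforce_quality_gate(results: Iterable[Mapping[str, str]]) -> None:
    """Stop the load (by raising) if any validation test failed."""
    failures = failed_tests(results)
    if failures:
        raise DataQualityGateError(
            f"Load stopped: {len(failures)} failed test(s): {', '.join(failures)}"
        )


def load_into_warehouse(rows: list[dict], results: list[dict]) -> int:
    """Hypothetical load step: check the gate first, then 'load' the rows."""
    enforce_quality_gate(results)
    # A real pipeline would write to the warehouse here; this sketch
    # only reports how many rows would have been loaded.
    return len(rows)
```

Raising an exception instead of returning a flag means that most orchestration tools will mark the step as failed and skip the steps that depend on it, which is exactly the "stop loading" behavior described above.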

The second scenario is triggering subsequent actions in external applications or processes when BiG EVAL detects data quality issues. This means organizations can create service desk tickets, inform stakeholders, restart integration processes, or start actions to fix the data quality issues. By automating subsequent actions, organizations can improve issue resolution times, minimize the impact of data quality issues, and ensure that data quality standards are consistently maintained across the organization.

The Benefits of BiG EVAL's Integration Capabilities

BiG EVAL's integration capabilities provide a range of benefits for modern organizations.

Improved collaboration and communication between different teams and stakeholders

Seamless communication and collaboration between teams helps to identify and resolve data quality issues faster, ensures everyone has access to accurate data, and improves decision-making. This can lead to greater innovation, productivity, and profitability across the organization.

Faster identification and resolution of data quality issues

Faster identification of data quality issues and the source of the problem can provide several benefits for modern organizations. It enables organizations to quickly and proactively address data quality issues before they become larger problems, minimizing the impact on the entire organization. By identifying the source of the problem quickly, organizations can also prevent similar issues from occurring in the future, improving the overall quality of their data.

Cost savings through automation and reduced manual intervention

Addressing data quality issues early can provide significant cost savings for modern organizations that strive for business agility. Automated tests and automatically triggered follow-up actions reduce the manual checking and coordination that would otherwise be needed. By proactively identifying and resolving data quality issues, organizations can minimize the risk of errors and inconsistencies in data management practices, leading to fewer costly mistakes and better business outcomes.

Additionally, early identification of data quality issues can prevent larger, more costly problems from arising in the future, reducing the need for expensive remediation efforts down the line.

Overall, addressing data quality issues early can help organizations gain a competitive advantage and save time, money, and resources, positioning them for success in today's business environment.

How does BiG EVAL integrate with external applications?

While the business benefits of BiG EVAL's integration capabilities are clear, it is important to understand how they work from a technical perspective.

Triggering Data Validation and Test Runs within BiG EVAL

Applications and processes such as Azure DevOps, data integration processes, and workflows can use BiG EVAL's secure REST API to trigger data validation and regression tests. BiG EVAL also provides a PowerShell module and plugins for many common tools that simplify this task.

This technique enables organizations to integrate BiG EVAL's validation and testing capabilities into existing workflows with minimal changes.

By triggering data validation and regression tests through BiG EVAL's REST API, organizations can automate the testing process and ensure that data quality standards are consistently maintained across the organization.
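The following Python sketch shows the general pattern of triggering a test run over REST and polling for its outcome. The base URL, the endpoint paths (`/runs`, `/runs/{id}`), the bearer-token header, and the JSON fields (`suite_id`, `run_id`, `state`, `result`) are all hypothetical placeholders, not BiG EVAL's documented API; the product's API documentation (or its PowerShell module) describes the real calls. The HTTP transport is passed in as a function so the polling logic can be exercised without a server.

```python
import json
import time
import urllib.request
from typing import Callable, Optional

# A transport takes (method, url, body) and returns the parsed JSON response.
Transport = Callable[[str, str, Optional[dict]], dict]


def urllib_transport(token: str) -> Transport:
    """Build a transport on the standard library (hypothetical auth scheme)."""
    def send(method: str, url: str, body: Optional[dict]) -> dict:
        data = json.dumps(body).encode("utf-8") if body is not None else None
        req = urllib.request.Request(url, data=data, method=method)
        req.add_header("Authorization", f"Bearer {token}")
        req.add_header("Content-Type", "application/json")
        with urllib.request.urlopen(req, timeout=30) as resp:
            return json.loads(resp.read().decode("utf-8"))
    return send


def run_tests(base_url: str, suite_id: str, send: Transport,
              poll_seconds: float = 5.0, timeout_seconds: float = 600.0) -> str:
    """Trigger a test run, poll until it finishes, and return its result."""
    started = send("POST", f"{base_url}/runs", {"suite_id": suite_id})
    run_id = started["run_id"]
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        status = send("GET", f"{base_url}/runs/{run_id}", None)
        if status["state"] == "finished":
            return status["result"]  # e.g. "passed" or "failed" (hypothetical)
        time.sleep(poll_seconds)
    raise TimeoutError(f"Run {run_id} did not finish within {timeout_seconds}s")
```

In a CI pipeline, any result other than "passed" can be turned into a non-zero exit code so that the deployment step is skipped; injecting the transport also makes it easy to swap in retries or a different HTTP client later.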

Triggering Subsequent Actions in the Case of Quality Issues

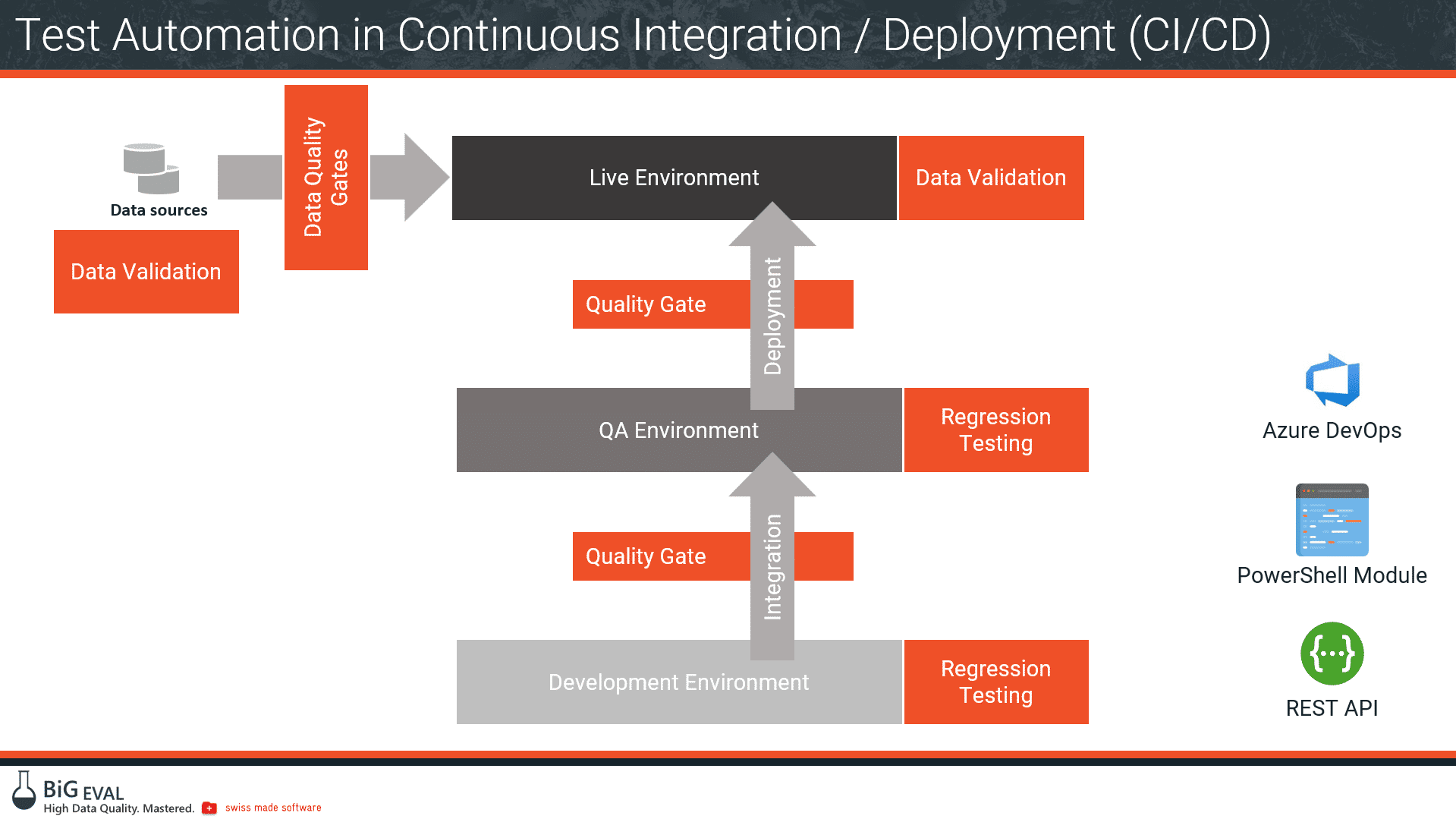

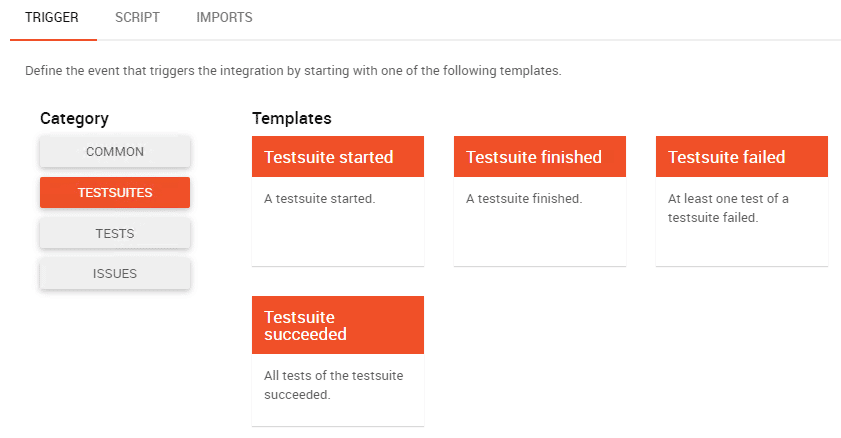

BiG EVAL's integration capabilities enable organizations to trigger subsequent actions when data quality events occur, such as the detection of a data quality issue. When such an event occurs, BiG EVAL routes it, including basic information about the problem, to the integrated applications or processes.

BiG EVAL's visual editor lets you set up these routes within a couple of minutes. The receiving applications or processes can then use the basic information, or query more detailed reports from BiG EVAL, to initiate actions such as creating a help desk ticket in Jira (or any other tool), running a PowerShell script, or executing custom code or business logic.
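The routing idea can be modeled with a small Python sketch: each route pairs a condition on the event with an action, and every matching route receives the event's basic information. BiG EVAL configures routes in its visual editor rather than in code, so this only illustrates the concept; the event fields (`test_name`, `severity`, `message`) and the two example actions are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class QualityEvent:
    """Basic information about a data quality event (hypothetical fields)."""
    test_name: str
    severity: str  # e.g. "warning" or "error"
    message: str


@dataclass
class Route:
    """Sends events that satisfy `condition` to `action`."""
    condition: Callable[[QualityEvent], bool]
    action: Callable[[QualityEvent], str]


def dispatch(event: QualityEvent, routes: list[Route]) -> list[str]:
    """Run the action of every route whose condition matches the event."""
    return [route.action(event) for route in routes if route.condition(event)]


# Hypothetical actions: a real setup would call a ticketing API or run a script.
def create_ticket(event: QualityEvent) -> str:
    return f"ticket: {event.test_name} - {event.message}"


def notify_steward(event: QualityEvent) -> str:
    return f"email: {event.test_name} ({event.severity})"


# Errors open a ticket; every event also notifies the data steward.
routes = [
    Route(condition=lambda e: e.severity == "error", action=create_ticket),
    Route(condition=lambda e: True, action=notify_steward),
]
```

Keeping conditions and actions separate means one event can fan out to several receivers, and new receivers can be added without touching existing routes.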

Technical Integrations

At the time of writing, BiG EVAL comes with the following technical integrations. The BiG EVAL team aims to add new integrations with each release.

- Email: BiG EVAL sends email notifications to developers, data stewards, help desk technicians, or any other recipient. This allows them to react to data issues as soon as they arise.

- Webhooks: BiG EVAL triggers an HTTP webhook and passes the data validation results to it. A webhook is usually part of a business application and triggers actions within that application.

- Cloud Automation Providers: BiG EVAL triggers a workflow in Microsoft Power Automate, Zapier, or Make.com. These services integrate with thousands of other applications and allow you to build complex workflows if needed.

- Script: Developers provide a script (C# or PowerShell) that BiG EVAL runs when a data quality event occurs. Such actions can be simple tasks like copying files into quarantine, restarting a data load process, or even rebooting a server. Many administrative tasks can be automated this way.
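To illustrate the receiving end of the Webhooks integration, here is a minimal Python sketch of an HTTP endpoint, built on the standard library's `http.server`, that accepts a JSON POST and turns it into a ticket-like summary. The payload fields (`testName`, `status`, `details`) and the default port are hypothetical; check the payload your BiG EVAL instance actually sends before relying on any field names. A production receiver would also need authentication and TLS.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def summarize_event(payload: dict) -> dict:
    """Map a (hypothetical) webhook payload to a ticket-like summary."""
    status = payload.get("status", "unknown")
    return {
        "title": f"Data quality {status}: {payload.get('testName', 'unnamed test')}",
        "body": payload.get("details", ""),
        "create_ticket": status == "failed",
    }


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except ValueError:  # covers malformed JSON and undecodable bytes
            payload = None
        if not isinstance(payload, dict):
            self.send_response(400)
            self.end_headers()
            return
        summary = summarize_event(payload)
        # A real receiver would call a ticketing system here when
        # summary["create_ticket"] is True; this sketch only logs the title.
        self.log_message("%s", summary["title"])
        self.send_response(204)
        self.end_headers()


def serve(host: str = "127.0.0.1", port: int = 8080) -> None:
    """Run the receiver until interrupted (port chosen arbitrarily)."""
    HTTPServer((host, port), WebhookHandler).serve_forever()
```

Calling `serve()` starts the receiver; the mapping logic lives in `summarize_event` so it can be tested and changed independently of the HTTP plumbing.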

How to test BiG EVAL's data validation and integration capabilities?

To experience the benefits of BiG EVAL's data validation and integration capabilities, you can take advantage of a free 14-day trial of the tool.

During the trial, you can run validation and regression tests against your own data sets.

The trial also enables you to test BiG EVAL's integration capabilities by connecting to other applications and processes to trigger data validation and testing workflows and automate subsequent actions when data quality issues are detected.

You can see firsthand how the tool can help you achieve greater business agility and maintain data quality standards across the organization.

To start the free trial, sign up for an account today.

Conclusion: The Importance of Business Agility in Today's Environment

In today's rapidly evolving business environment, data quality is critical for achieving business agility and success. Organizations must be able to maintain data quality standards across the organization, quickly identify and resolve data quality issues, and make better-informed decisions based on accurate, reliable data.

BiG EVAL's integration capabilities enable organizations to achieve these goals by seamlessly integrating data validation and testing workflows into their existing processes, automating subsequent actions when data quality issues are detected, and providing real-time insights into data quality issues and trends.

By leveraging BiG EVAL's integration capabilities, organizations can improve collaboration and communication between different teams and stakeholders, reduce costs through automation and reduced manual intervention, and drive strategic growth and success through better-informed decision-making.

To experience the benefits of BiG EVAL's integration capabilities firsthand, you can sign up for a free 14-day trial of the tool today.
