Data Quality Management

Why Automatic Data Cleaning Is Like Putting Out a Fire With Gasoline

Learn why correcting and cleaning data at its source is better than using automatic tools. Our blog post explains how to make your organization's data trustworthy and reliable.

Accurate data is crucial in any data integration process, whether for a data warehouse, data analytics system, or any other purpose. However, data is often full of errors, inconsistencies, and other issues that can cause problems if not addressed.

This is where data cleaning comes in. Data cleaning is the process of identifying and correcting errors and inconsistencies in data to make it more accurate and reliable. It is a crucial step in any data integration process because it ensures that the data in use is of high quality.

There are many ways to clean data, and one of the most tempting is to use an automatic data-cleaning tool. These tools promise to fix errors in data quickly and easily, which makes them an appealing option for busy data professionals. But is this the best approach?

Automatic data cleaning tools can be a valuable part of a data integration process, but they should not be your only approach. While these tools can identify common errors in your data and make quick fixes, they often do not address more complex issues, such as inconsistent formats or values that don't make sense compared to other data points. Automatic cleaning may seem like a quick and easy fix, but it can cause more problems than it solves.

This blog post will explore the risks and drawbacks of using automatic data cleaning in a data integration process. We'll also outline why it is better to correct your data at its source – and explain how effective governance can help you with that task.

The Risks and Drawbacks of Automatic Data Cleaning

While automatic data cleaning tools may seem appealing for busy data professionals, there are several risks and drawbacks to using them in a data integration process. These include:

- Automatic data cleaning can introduce errors into the data: Misapplied data cleaning rules can introduce errors that were not present before. The result can be data that is even less accurate and reliable than it was before the cleaning process.

- Automatic data cleaning can be time-consuming and costly: Setting up and maintaining automated data cleansing rules requires significant time and resources. This can be particularly problematic for smaller organizations that may lack the resources to devote to data cleaning. Additionally, if the data cleaning rules need to be updated or changed, it can be time-consuming to make those changes and apply them correctly.

- Relying too heavily on automatic data cleaning tools can be risky: While these tools can be helpful in certain situations, it is essential to remember that they are only tools. They are not replacements for good data governance practices and should not be relied upon to fix all data errors. It is generally more reliable to correct data at its source and fix the root cause of errors. This ensures that the data is accurate from the start, rather than leaving mistakes to be fixed later on.

- Lack of flexibility: Automatic data cleaning tools may not be able to handle complex data cleaning tasks or cases where data needs cleaning in a specific way. This can limit the flexibility of the data cleaning process and make it challenging to clean data in a way that meets the organization's particular needs.

- Lack of transparency: Automatic data cleaning tools may not explain why they corrected specific data errors or how the cleaning process works. This lack of transparency makes it difficult to understand the cleaning process and to verify that it is correct. It can also make it harder to trace the source of errors or inconsistencies that are discovered later on.
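To make the first and last points concrete, here is a minimal Python sketch of how a blanket cleaning rule can damage data that was valid to begin with. The rule `auto_clean_zip` and the sample values are hypothetical and only serve as an illustration; they do not represent any particular tool.

```python
def auto_clean_zip(value: str) -> str:
    """Hypothetical blanket rule: keep only digits and store them as a number."""
    digits = "".join(ch for ch in value if ch.isdigit())
    # Converting to int silently drops leading zeros.
    return str(int(digits)) if digits else ""


# Illustrative postal codes: two US ZIP codes and one UK postcode.
records = ["02134", "10001", "SW1A 1AA"]
cleaned = [auto_clean_zip(r) for r in records]

# Without an explicit change log like this one, nobody can tell afterwards
# which values the rule altered or why.
changes = [(before, after) for before, after in zip(records, cleaned) if before != after]

for before, after in changes:
    print(f"{before!r} was rewritten to {after!r}")
```

In this sketch, two of the three values are valid at the source and still get rewritten: the leading zero of `02134` is lost, and the UK postcode is reduced to its digits. Only the explicit `changes` list makes that visible.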

While automatic data cleaning can be tempting as a way to fix errors in a data integration process quickly, it is generally not a good idea. It is better to correct data at its source to fix the root cause of errors, ensuring that the data is accurate and reliable from the start.

Correcting data at its source reduces the number of data errors that need cleaning in the first place. It also makes it easier to trace the source of errors should they appear later on, which can help you identify and correct the cause more quickly.
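One way to picture correction at the source is a validation step at the point of entry that rejects a bad record and reports why, instead of silently repairing it downstream. The following Python sketch assumes a hypothetical customer record with `customer_id` and `email` fields; the field names and the simple email pattern are illustrative assumptions, not a complete validation scheme.

```python
import re
from dataclasses import dataclass
from typing import List

# Deliberately simple pattern for illustration; real email validation is stricter.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class ValidationResult:
    accepted: bool
    problems: List[str]


def validate_customer(record: dict) -> ValidationResult:
    """Check a record where it is entered and report every problem found."""
    problems = []
    if not record.get("customer_id"):
        problems.append("customer_id is missing")
    email = record.get("email") or ""
    if not EMAIL_PATTERN.match(email):
        problems.append(f"email {email!r} is not well-formed")
    return ValidationResult(accepted=not problems, problems=problems)


result = validate_customer({"customer_id": "", "email": "jane.doe(at)example.com"})
if not result.accepted:
    # The record goes back to the person or system that produced it.
    print("Rejected:", "; ".join(result.problems))
```

Because the record is returned with explicit reasons, the person or system that produced it can fix the cause, and the same error is less likely to come back.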

The Benefits of Correcting Data at Its Source

Data cleaning may look like a simple task that anyone can do with the right tools, but it's best to correct data at its source. Doing so avoids many of the pitfalls associated with data integration and helps keep your data accurate and reliable. Here are some of the potential benefits of correcting data at its source:

- Accuracy and reliability: By correcting data at its source, you can be confident that the data in your processes is of high quality, because bad records never enter your systems. This helps to avoid problems down the line, such as incorrect analysis or reports based on faulty data.

- Root causes get fixed: By identifying and correcting errors at their source, you prevent those errors from being introduced into your data in the first place. This can save significant time and resources that you would otherwise spend fixing mistakes introduced during automatic cleaning.

- Lower data integration costs: By ensuring that your data is clean and in a usable format before you integrate it with other data sources, you avoid spending time and money on cleanup later. For example, if your data is already clean when you build your database, there's no need to spend extra time cleaning up bad records after importing them into your system.

- Easier identification of error sources: Once you know where errors in your data originate, you know what to look for when fixing them. For example, if you know that only certain types of records are likely to have issues, then checking these records first will save time and effort in your overall data quality efforts.

- Greater control: Correcting data at its source gives you control over the data quality in your data integration process. You can ensure that the data in use is of high quality and meets your organization's specific needs.

- Improved productivity: When the data you integrate contains fewer errors, it's easier for your business users to get the information they need. This can improve their productivity and save valuable time.

- Improved customer experience: When you can provide quality data to your customers, it can enhance their experience with your organization. This can lead to greater loyalty and retention, which is essential in today's competitive marketplace.

- Improved data governance: Good data governance practices are crucial for ensuring that your data is accurate and reliable. By correcting data at its source, you can ensure that your data governance processes are effective and that your data is adequately managed and maintained. This can prevent errors and improve the overall quality of your data.

While automatic data cleaning tools can be helpful in certain situations, they are generally not a replacement for good data governance practices and the process of correcting data at its source. By taking the time to correct data at its source, you can ensure that your data is of high quality and ready for use in your data integration process.

The Role of Data Governance in Data Correction

As we mentioned earlier, correcting data at its source is an effective way to ensure that the data you use in a data integration process is accurate and reliable. However, doing this well requires good data governance processes.

Data governance is the process of managing and maintaining the quality of data within an organization. It involves establishing policies, procedures, and standards for data management and making sure they are followed. Data governance processes are crucial for curating and fixing data because they provide a framework for identifying and correcting errors and inconsistencies.

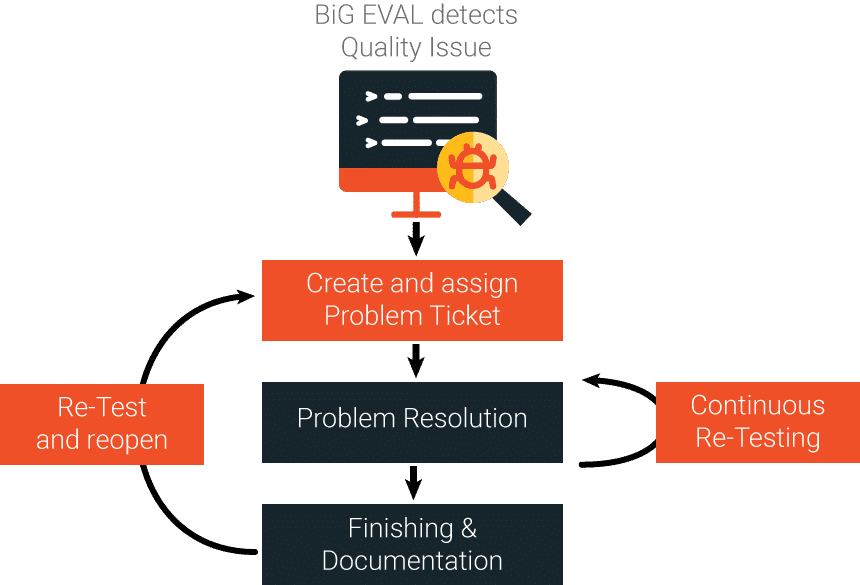

One way to seamlessly integrate data quality validation with data governance processes is to use a tool like BiG EVAL.

For example, suppose a client using an automatic data cleaning tool is struggling to maintain the accuracy of the data in their data warehouse. In that case, they can use BiG EVAL to do a quick data quality analysis on their primary and secondary keys. If the tool shows missing values or duplicate records, it's clear that the client needs to establish a new set of policies and procedures for managing this information.

BiG EVAL automates the client's data validation process and integrates it with their data governance processes. This allows them to quickly identify and correct errors at their source, significantly improving the accuracy and reliability of their data.
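The kind of key check described in this example, looking for missing values and duplicate records, can be sketched in a few lines of plain Python. This is not BiG EVAL's API; the function `check_key_column` and the sample rows are hypothetical and only illustrate what such a check looks for.

```python
from collections import Counter


def check_key_column(rows, key):
    """Report rows with a missing key and key values that occur more than once."""
    missing_rows = [i for i, row in enumerate(rows) if row.get(key) in (None, "")]
    counts = Counter(row[key] for row in rows if row.get(key) not in (None, ""))
    duplicate_keys = sorted(k for k, n in counts.items() if n > 1)
    return {"missing_rows": missing_rows, "duplicate_keys": duplicate_keys}


# Illustrative rows from a hypothetical orders table.
orders = [
    {"order_id": 1001, "amount": 25.0},
    {"order_id": 1002, "amount": 40.0},
    {"order_id": 1002, "amount": 40.0},   # duplicate key
    {"order_id": None, "amount": 12.5},   # missing key
]

report = check_key_column(orders, "order_id")
print(report)
```

A finding like this does not say how to repair the rows. It points to the process that created them, which is where governance policies come in.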

BiG EVAL can also help improve data governance in the context of data migrations. If a client struggles to ensure the quality of their data during a migration process, using BiG EVAL will help them identify and correct errors before they reach the target system, ensuring that their data is clean and ready for use.

BiG EVAL can also help improve data governance in the context of mergers and acquisitions. When two companies come together, it's essential to identify differences in how they manage their data and address them quickly. BiG EVAL will help companies identify the differences in their data and create a plan to address them.

And it's easy:

The BiG EVAL GALLERY contains many test cases and data validation rules that can be applied directly to your enterprise data.

Conclusion

Using automatic data cleaning in a data integration process is generally not a good idea. While these tools may seem appealing as a quick fix for errors, they come with several risks and drawbacks. Automatic data cleaning can introduce errors in the data, it can be time-consuming and costly, and it can be risky to rely too heavily on these tools.

Instead, it is better to correct data at its source to fix the root cause of errors. This ensures that the data is accurate and reliable from the start and can help to avoid problems down the line. Correcting data at its source also has many other benefits, including lower integration costs, improved productivity, greater control, and enhanced data governance.

If you want to improve the accuracy and reliability of your data and ensure that your data governance processes are effective, consider using a tool like BiG EVAL. BiG EVAL automates data validation tasks and integrates seamlessly with data governance processes to help ensure the quality of your data.

Contact us today to learn more about BiG EVAL or to get started on a personal demo or 14-day trial.
