Automated Data Analytics Testing

Power Up End-to-End Data Validation with a Technology-Agnostic Solution

End-to-end testing of data analytics components with a technology-agnostic tool is crucial to deliver the most benefit to the business.

Testing a data warehouse and its data integration processes is a crucial step in building a reliable data analytics system. Even if you own the most sophisticated data analytics and integration technology, you cannot simply “forget” data validation testing and data quality monitoring, because many sources of quality issues do not depend on the technology you use.

Why Data Analytics Technologies Cannot Eliminate Data Validation Testing

Many data technology vendors tell you that you don’t need any testing processes if you rely on their unique technology. They back up this claim by presenting highly sophisticated features that make integrating data into their databases simpler and more stable.

Admittedly, complex technologies are the source of many issues that harm your data quality, and reducing complexity minimizes the impact of that factor. But there are many more reasons why you may have data quality issues:

- Data input quality issues

People tend to make mistakes when entering information into their computers. They even misuse input fields for purposes they weren’t intended for.

- Data modelling issues

The concept behind the analytical data model may simply be incorrect. It may not be correctly derived from your business model, or it may not reflect what the source data requires.

- Wrong understanding of information and data models

Each vendor of operational systems stores data differently. Interpreting and handling exported data correctly often requires a deep understanding of their data models, even when no data model documentation is available.
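Input quality issues are the easiest of these categories to catch with simple rules. The following minimal Python sketch assumes a hypothetical CRM export with `email` and `phone` fields; the field names and the deliberately loose regular expressions are illustrative assumptions, not rules taken from any particular tool.

```python
import re
from typing import Dict, List

# Hypothetical rules: field name -> pattern a valid value must fully match.
# The patterns are deliberately simple illustrations, not production-grade validators.
RULES: Dict[str, re.Pattern] = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?[0-9 ()/-]{6,20}"),
}

def find_input_issues(rows: List[Dict[str, str]]) -> List[str]:
    """Return one message per field value that is empty or violates its rule."""
    issues: List[str] = []
    for i, row in enumerate(rows):
        for field, pattern in RULES.items():
            value = (row.get(field) or "").strip()
            if not value:
                issues.append(f"row {i}: '{field}' is empty")
            elif not pattern.fullmatch(value):
                issues.append(f"row {i}: '{field}' has unexpected value {value!r}")
    return issues

if __name__ == "__main__":
    export = [
        {"email": "anna@example.com", "phone": "+41 44 123 45 67"},
        {"email": "bob@example.com", "phone": "call after 5pm"},  # a note typed into the phone field
        {"email": "", "phone": "044 987 65 43"},                   # missing mandatory value
    ]
    for issue in find_input_issues(export):
        print(issue)
```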

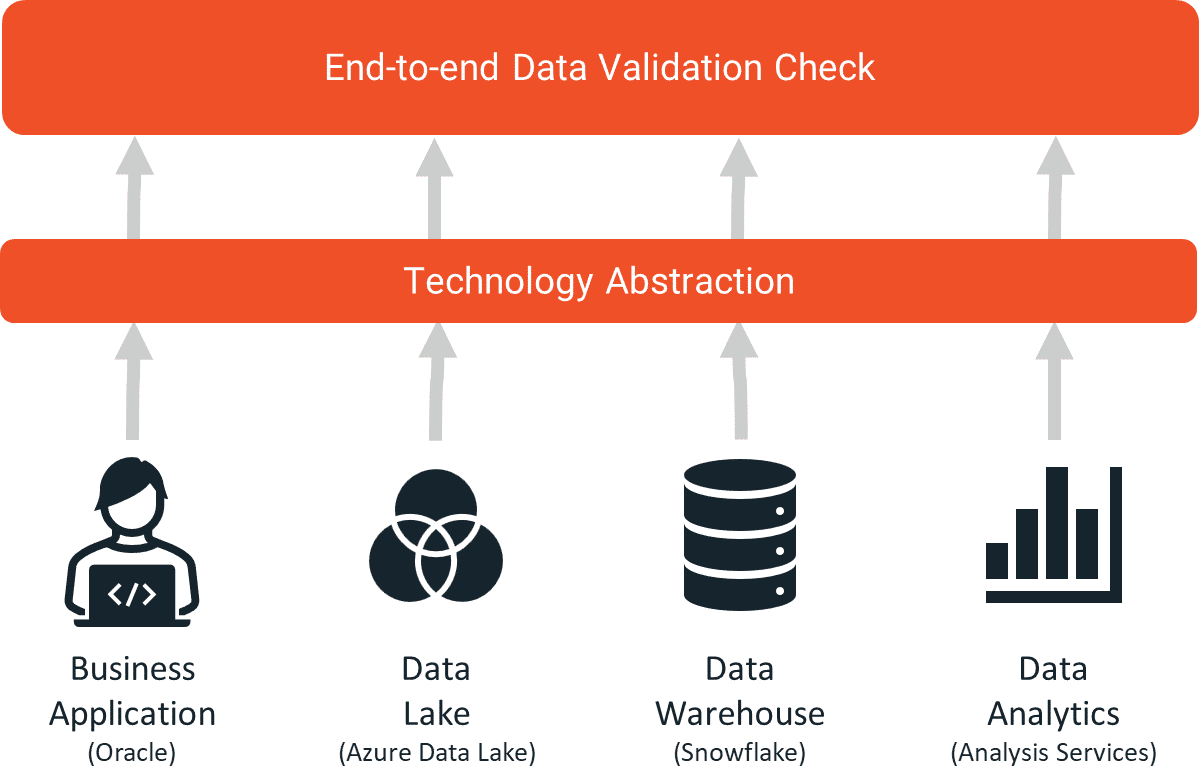

Technology-Agnostic Tools

When it comes to end-to-end data validation, a technology-agnostic approach leads to success. The most beneficial data validation checks are those that test the integrity and correctness of information and the correct implementation of data modelling concepts. Two examples of such concepts are a slowly changing dimension and a PIT (point-in-time) table in a Data Vault model, both of which need to work correctly. Regardless of the technology used to implement these concepts, they need to work correctly and smoothly, and that needs to be tested throughout the whole release cycle.
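As an illustration of testing a modelling concept rather than a technology, here is a minimal sketch of a slowly changing dimension check. The text does not specify the historization type, so the sketch assumes a type 2 history with half-open `[valid_from, valid_to)` intervals and `valid_to = None` marking the current version; the `DimRow` type and these conventions are assumptions for the example.

```python
from dataclasses import dataclass
from datetime import date
from itertools import groupby
from typing import List, Optional

@dataclass(frozen=True)
class DimRow:
    business_key: str
    valid_from: date
    valid_to: Optional[date]  # assumption: None marks the current version

def check_scd2(rows: List[DimRow]) -> List[str]:
    """Check a type 2 history, assuming half-open [valid_from, valid_to) intervals."""
    errors: List[str] = []
    ordered = sorted(rows, key=lambda r: (r.business_key, r.valid_from))
    for key, group in groupby(ordered, key=lambda r: r.business_key):
        versions = list(group)
        # Exactly one open-ended (current) version per business key.
        current = [v for v in versions if v.valid_to is None]
        if len(current) != 1:
            errors.append(f"{key}: expected 1 current version, found {len(current)}")
        # Closed versions must span a non-empty interval.
        for v in versions:
            if v.valid_to is not None and v.valid_to <= v.valid_from:
                errors.append(f"{key}: empty or inverted interval starting {v.valid_from}")
        # Consecutive versions must neither overlap nor leave gaps.
        for prev, nxt in zip(versions, versions[1:]):
            if prev.valid_to is None or prev.valid_to > nxt.valid_from:
                errors.append(f"{key}: versions overlap at {nxt.valid_from}")
            elif prev.valid_to < nxt.valid_from:
                errors.append(f"{key}: gap between {prev.valid_to} and {nxt.valid_from}")
    return errors

if __name__ == "__main__":
    history = [
        DimRow("C1", date(2023, 1, 1), date(2023, 6, 1)),
        DimRow("C1", date(2023, 6, 1), None),              # correct history
        DimRow("C2", date(2023, 1, 1), date(2023, 5, 1)),
        DimRow("C2", date(2023, 4, 1), None),              # overlaps the previous version
    ]
    for error in check_scd2(history):
        print(error)
```

Because the check only looks at keys and validity dates, the same logic applies whether the dimension lives in a relational warehouse, a lakehouse table or an export file.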

If you are deciding whether to build or buy a data validation tool and lean toward a standard product for end-to-end data validation, you should place a lot of importance on the tool’s technology independence. A software solution like BiG EVAL, for example, comes with a technology abstraction layer that allows validating data independently of its source technology. It transforms all technology-specific characteristics of data into a neutral view, which allows mixing and matching data from different technologies within the very same data validation check. For example, you may reconcile data from your SaaS CRM with your data lake, your Snowflake data warehouse and a Microsoft SQL Server Analysis Services tabular model at the same time. This is powerful, as you don’t need to worry about data-type conversions, encoding, connectivity and much more.
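The idea of a neutral view can be sketched in a few lines. This is not BiG EVAL’s implementation but a hypothetical illustration: each source delivers `(key, amount)` pairs in its own native types (floats from a JSON API, `Decimal` values from a database driver, comma-decimal strings from a CSV export), and everything is converted to trimmed upper-case keys and `Decimal` amounts rounded to cents before comparison. The function names and the conversion rules are assumptions for the example.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Any, Dict, Iterable, Tuple

CENT = Decimal("0.01")

def to_neutral_amount(value: Any) -> Decimal:
    """Convert a source-specific numeric value into a Decimal rounded to cents."""
    if isinstance(value, bool):
        raise TypeError("booleans are not amounts")
    if isinstance(value, (int, Decimal)):
        amount = Decimal(value)
    elif isinstance(value, float):
        amount = Decimal(str(value))  # str() avoids exposing binary float artifacts
    elif isinstance(value, str):
        # Assumption: strings carry no thousands separators; a comma is a decimal mark.
        amount = Decimal(value.strip().replace(",", "."))
    else:
        raise TypeError(f"unsupported type {type(value).__name__}")
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)

def to_neutral_key(value: Any) -> str:
    """Keys compare as trimmed, upper-case text regardless of the source type."""
    return str(value).strip().upper()

def neutral_view(rows: Iterable[Tuple[Any, Any]]) -> Dict[str, Decimal]:
    """Aggregate (key, amount) pairs from any source into one neutral mapping."""
    view: Dict[str, Decimal] = {}
    for key, amount in rows:
        k = to_neutral_key(key)
        view[k] = view.get(k, Decimal("0.00")) + to_neutral_amount(amount)
    return view

if __name__ == "__main__":
    crm_api = [("c-001", 1200.5), ("c-002", 99)]                           # JSON floats and ints
    warehouse = [("C-001", Decimal("1200.50")), ("C-002", Decimal("99.00"))]  # driver Decimals
    csv_export = [(" c-001 ", "1200,50"), ("C-002", "99")]                  # text with comma decimals
    views = [neutral_view(source) for source in (crm_api, warehouse, csv_export)]
    print(all(v == views[0] for v in views))
```

Once all sources share one representation, a single equality comparison is enough, and the conversion rules live in one place instead of being repeated in every check.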

Inter-System Data Validation

A technology-independent data validation solution also provides a way to perform inter-system data validations. This is crucial for data quality validation, whether during the runtime of data integration processes or in preparation for advanced data analytics or data science scenarios.

Many data validation checks are only useful if actual information can be validated against a reference value. For example, you may validate a list of monthly revenues calculated in the data analytics model against a list of target values provided by the financial controlling department, or even against a list provided by the financial accounting system.
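A reconciliation against reference values comes down to aligning both lists on a common key and reporting missing entries and deviations. The sketch below assumes months are keyed as `YYYY-MM` strings and that an absolute tolerance is acceptable; the function name and the `tolerance` parameter are illustrative assumptions.

```python
from decimal import Decimal
from typing import Dict, List

def reconcile_revenue(actual: Dict[str, Decimal],
                      reference: Dict[str, Decimal],
                      tolerance: Decimal = Decimal("0.00")) -> List[str]:
    """Compare model revenues with reference values month by month."""
    findings: List[str] = []
    for month in sorted(set(actual) | set(reference)):
        if month not in actual:
            findings.append(f"{month}: missing in analytics model")
        elif month not in reference:
            findings.append(f"{month}: missing in reference list")
        else:
            diff = actual[month] - reference[month]
            if abs(diff) > tolerance:
                findings.append(f"{month}: deviation {diff}")
    return findings

if __name__ == "__main__":
    model = {"2024-01": Decimal("10500.00"), "2024-02": Decimal("9800.00"),
             "2024-03": Decimal("11200.00")}
    targets = {"2024-01": Decimal("10500.00"), "2024-02": Decimal("9950.00"),
               "2024-04": Decimal("10000.00")}
    for finding in reconcile_revenue(model, targets):
        print(finding)
```

The two inputs could come from entirely different systems; in practice each side would first be brought into a common representation before this comparison runs.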

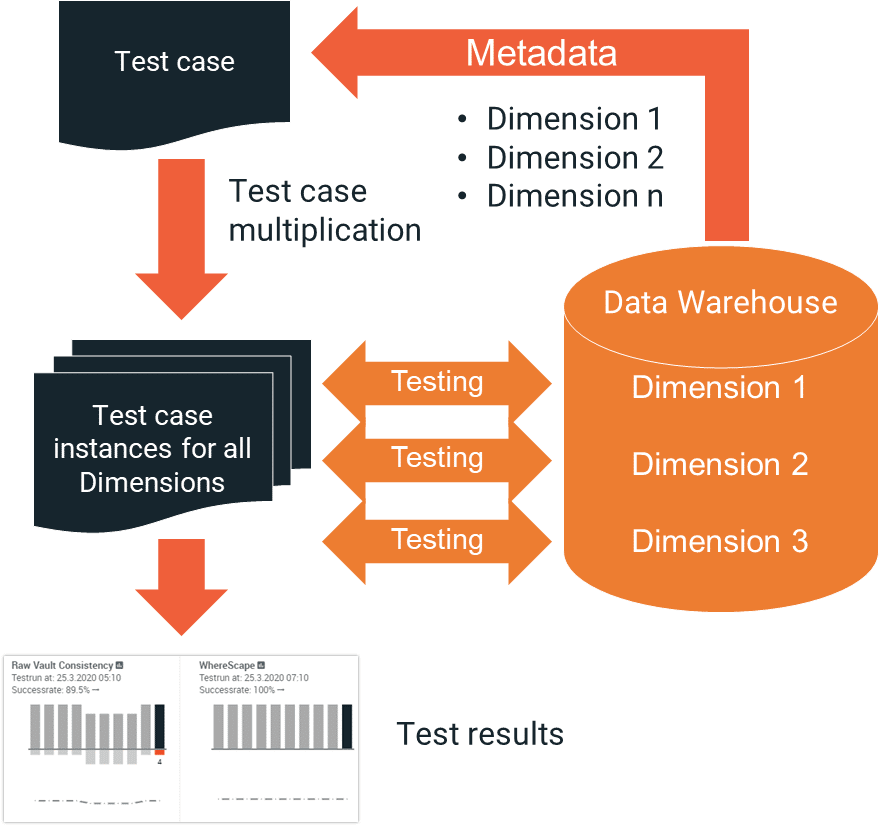

This kind of data validation is difficult to do with conventional tools, especially if you need to avoid complexity and strive for standardization.

Metadata-Driven Data Validation

Because data architects usually strive for standardization in their data models, the same patterns are used repeatedly for different data entities. Building the same validation checks for each of these entities, or duplicating existing ones, is inefficient and a pain from a maintenance perspective. Leading data validation tools therefore come with features that automatically scale validation checks onto other entities that follow the same modelling patterns. Information about the structure of data (also called metadata), which can be sourced from different data storage technologies, builds the foundation for the massive scaling features of tools like BiG EVAL. In one scenario, BiG EVAL automatically detects all dimension tables that implement a specific historization pattern. A test case that checks whether that pattern works correctly can then be applied to all of these dimensions automatically.
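The metadata-driven scenario can be sketched with SQLite’s catalog, which Python’s standard library exposes through `sqlite3`. The sketch assumes a hypothetical naming convention in which every historized dimension carries `business_key`, `valid_from` and `valid_to` columns. It discovers matching tables from `sqlite_master` and `PRAGMA table_info`, then applies one check template (exactly one current row per business key) to each of them. A real tool would read metadata from each storage technology’s own catalog; this is only an illustration of the principle.

```python
import sqlite3
from typing import Dict, List

# Assumed historization pattern: any table carrying these columns is treated
# as a type 2 dimension. The column names are an illustrative naming convention.
PATTERN_COLUMNS = {"business_key", "valid_from", "valid_to"}

# One test template, parameterized only by the table name.
CURRENT_ROW_CHECK = """
    SELECT business_key
    FROM "{table}"
    GROUP BY business_key
    HAVING SUM(CASE WHEN valid_to IS NULL THEN 1 ELSE 0 END) <> 1
    ORDER BY business_key
"""

def find_historized_tables(conn: sqlite3.Connection) -> List[str]:
    """Read table metadata and return the tables that implement the pattern."""
    tables = [r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    matches = []
    for table in tables:
        columns = {r[1] for r in conn.execute(f'PRAGMA table_info("{table}")')}
        if PATTERN_COLUMNS <= columns:
            matches.append(table)
    return matches

def run_pattern_checks(conn: sqlite3.Connection) -> Dict[str, List[str]]:
    """Apply the same check to every discovered table; map table -> offending keys."""
    return {
        table: [r[0] for r in conn.execute(CURRENT_ROW_CHECK.format(table=table))]
        for table in find_historized_tables(conn)
    }

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE dim_customer (business_key TEXT, name TEXT, valid_from TEXT, valid_to TEXT);
        CREATE TABLE dim_product  (business_key TEXT, label TEXT, valid_from TEXT, valid_to TEXT);
        CREATE TABLE fact_sales   (customer_key TEXT, amount REAL);
        INSERT INTO dim_customer VALUES
            ('C1', 'Anna', '2023-01-01', '2023-06-01'),
            ('C1', 'Anna M.', '2023-06-01', NULL),
            ('C2', 'Bob', '2023-01-01', '2023-03-01');
        INSERT INTO dim_product VALUES
            ('P1', 'Widget', '2023-01-01', NULL),
            ('P1', 'Widget v2', '2023-02-01', NULL);
    """)
    print(run_pattern_checks(conn))
```

Adding a new dimension that follows the convention requires no new test: it is picked up on the next run, while `fact_sales` is skipped because it lacks the pattern columns.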

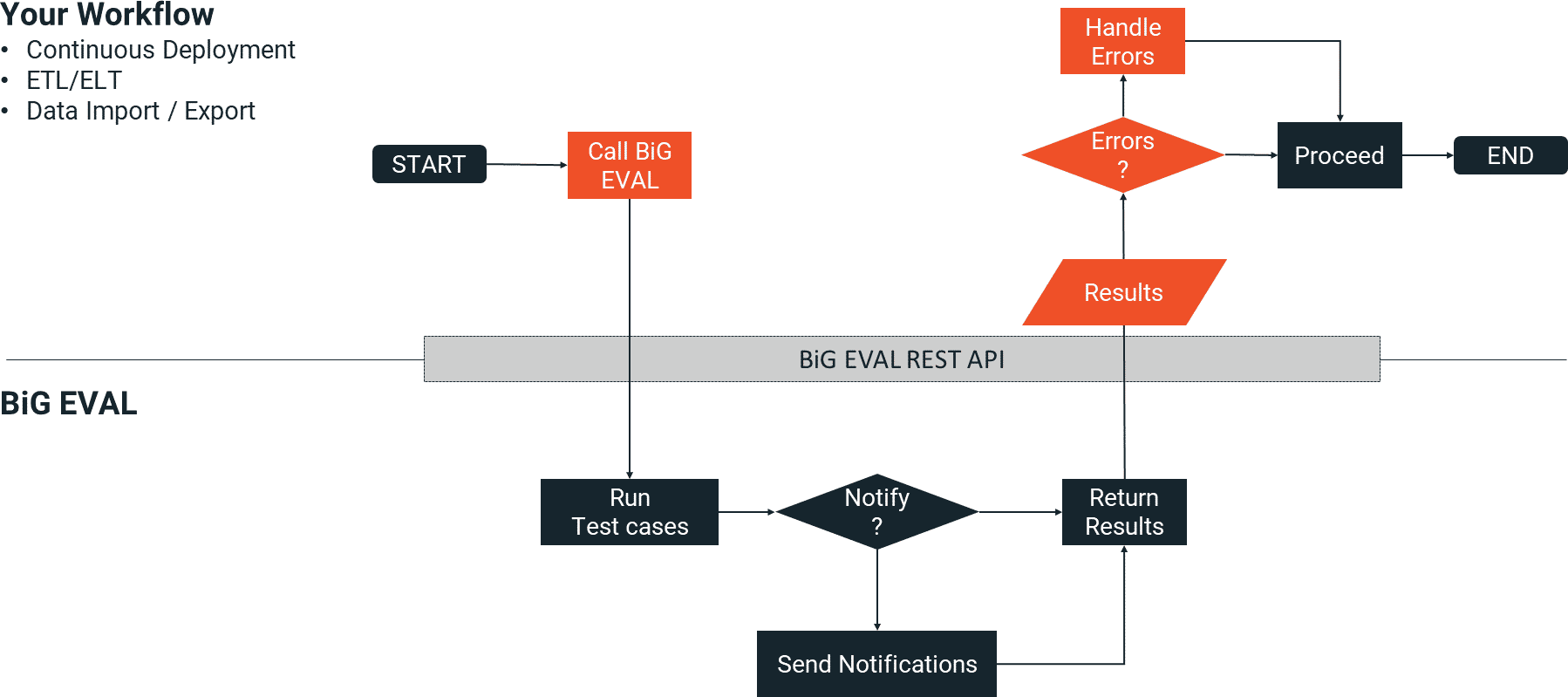

Data Validation Orchestration

Assume you now have a couple of data validation checks that benefit your business. The next step is running them periodically and getting notified about detected issues as quickly as possible. You may run them using a conventional task scheduler. But it gets even more interesting and beneficial if you can embed data validation checks right into your technical processes, or into business processes that are controlled by workflow technologies. Imagine you could validate the important data your process depends on before the process starts. Erroneous data wouldn’t flow into a data warehouse, where it could only be removed or fixed the hard way. And wrong information wouldn’t harm the efficiency of your business processes, as it wouldn’t break the process or force people to redo work.
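Validating before the process starts can be sketched as a gate in front of the load step: all checks run against the staged batch, and the load is only called when no check reports a finding. The check functions, `ValidationFailed` and `load_if_valid` are hypothetical names for this illustration, and an in-memory list stands in for the data warehouse.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Check = Callable[[List[Row]], List[str]]  # returns findings; an empty list means "passed"

class ValidationFailed(Exception):
    """Raised when a staged batch must not be loaded."""
    def __init__(self, findings: List[str]):
        super().__init__(f"{len(findings)} validation finding(s): " + "; ".join(findings))
        self.findings = findings

def not_null(column: str) -> Check:
    def check(rows: List[Row]) -> List[str]:
        return [f"row {i}: '{column}' is null" for i, r in enumerate(rows) if r.get(column) is None]
    return check

def unique(column: str) -> Check:
    def check(rows: List[Row]) -> List[str]:
        seen, findings = set(), []
        for i, r in enumerate(rows):
            value = r.get(column)
            if value in seen:
                findings.append(f"row {i}: duplicate '{column}' value {value!r}")
            seen.add(value)
        return findings
    return check

def load_if_valid(staged: List[Row], checks: List[Check],
                  load: Callable[[List[Row]], None]) -> None:
    """Run every check first; call the load step only if no check reports a finding."""
    findings = [msg for check in checks for msg in check(staged)]
    if findings:
        raise ValidationFailed(findings)
    load(staged)

if __name__ == "__main__":
    warehouse: List[Row] = []
    checks = [not_null("order_id"), unique("order_id")]
    load_if_valid([{"order_id": 1}, {"order_id": 2}], checks, warehouse.extend)
    try:
        load_if_valid([{"order_id": 3}, {"order_id": 3}, {"order_id": None}], checks, warehouse.extend)
    except ValidationFailed as exc:
        print(exc.findings)
    print(len(warehouse))  # the faulty batch never reached the warehouse
```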

A data validation tool like BiG EVAL offers this kind of integration and control over the data validation process. Embedding data validation tests into your workflows or technical processes is simple, and existing processes do not need to be changed extensively. A single API call (further simplified by a PowerShell module) runs the needed validation tests. Depending on the outcome, you may let the process continue or have it take a specific action if erroneous data is detected.
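How a process might react to the outcome can be sketched as a small decision function that maps results to an exit code, which most schedulers and workflow engines can branch on. `run_validation_suite` is a hypothetical stand-in that returns fixed demo results; it is not BiG EVAL’s API or its PowerShell module, whose actual calls are not described here. The three-level `Outcome` enum is likewise an assumption.

```python
import sys
from enum import Enum
from typing import Callable, Dict, List

class Outcome(Enum):
    PASSED = "passed"
    WARNING = "warning"
    FAILED = "failed"

def decide(results: Dict[str, Outcome], on_warning: Callable[[List[str]], None]) -> int:
    """Map validation outcomes to a process exit code: 0 = continue, 1 = stop."""
    failed = sorted(name for name, o in results.items() if o is Outcome.FAILED)
    warned = sorted(name for name, o in results.items() if o is Outcome.WARNING)
    if failed:
        print(f"Stopping: failed checks {failed}", file=sys.stderr)
        return 1
    if warned:
        on_warning(warned)  # e.g. notify data stewards, but let the process continue
    return 0

def run_validation_suite(suite: str) -> Dict[str, Outcome]:
    """HYPOTHETICAL stand-in for the tool's API call; returns fixed demo results."""
    return {"revenue_vs_targets": Outcome.PASSED, "customer_history": Outcome.WARNING}

if __name__ == "__main__":
    code = decide(run_validation_suite("nightly-load"),  # hypothetical suite name
                  on_warning=lambda names: print(f"Notify: {names}"))
    print(f"exit code for the scheduler: {code}")
```

In a real wrapper script you would pass the returned code to `sys.exit` so that the calling process stops on failure, while warnings only trigger a notification.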

Conclusion

Data validation is an important task for every data analytics project and for any other data-driven project. Despite the promises data-storage vendors make, their tools neither replace data validation nor render it unnecessary. The technology-agnostic concepts of data validation solutions like BiG EVAL make the process simple and smooth, as they come with powerful features for inter-system and massively scaling data validations, which build the foundation for beneficial and efficient checks.